Intro

Click here to view source code

Click here to interact with the site

Over the past several years I took a hiatus from updating this blog as I ventured away from WPF development and dove head first into web development, specifically Angular. For the last few years, my focus has been on React. Now seems like a good time to share some of the knowledge I picked up while learning and becoming comfortable with the library. Perhaps this information will help others as well.

Recently, I have been working with Storybook to display a collection of React components to users. The framework provides a quick and elegant way to demonstrate an assortment of components and even allows for flexible interactivity by updating properties live. The Storybook team finally released version 7 of their framework, announcing it at their first ever conference. This is their first major release in 2.5 years, and the list of features shows it. Unfortunately, the release also includes a plethora of breaking changes, most notably those caused by Storybook upgrading from MDX v1 to v2. I was already familiar with some of these issues from attempting to include a change log tab for each component. Although Storybook is moving away from permanent tabs at the top of canvas panes, the framework made it difficult to use custom components in customized tabs. I will describe the steps taken to get this working, which involve leveraging MDX v2.

Create Add-on Tab

The first step is to create the infrastructure for displaying a tab. Storybook has a good write-up about how to do this. Below is a quick summary:

- Install the `react`, `typescript`, `react-dom`, and `@babel/cli` packages
- Create a `.babelrc.js` file and include the presets `@babel/preset-env`, `@babel/preset-typescript`, and `@babel/preset-react`

    module.exports = {
      presets: ['@babel/preset-env', '@babel/preset-typescript', '@babel/preset-react'],
      env: {
        esm: {
          presets: [
            [
              '@babel/preset-env',
              {
                modules: false
              }
            ]
          ]
        }
      }
    };

- Add scripts for building storybook files and individual components

    {
      "scripts": {
        "build": "yarn build:components && yarn build:storybook:babel && yarn build:storybook:tsc",
        "build:components": "rm -rf ./components/**/build && tsc -b",
        "build:storybook:babel": "rm -rf dist/storybook/esm && babel ./src/storybook -d ./dist/storybook/esm --env-name esm --extensions \".tsx\"",
        "build:storybook:tsc": "rm -rf dist/storybook/tsc && tsc --project ./src/storybook"
      }
    }

- Add a `manager.tsx` file to register a new addon tab

    import React from 'react';
    import { addons, types } from '@storybook/addons';

    addons.register('change-log', () => {
      addons.add('change-log', {
        type: types.TAB,
        title: 'Change Log',
        route: ({ storyId, refId }) => {
          return `/change-log/${storyId}`;
        },
        match: ({ viewMode }) => viewMode === 'change-log',
        render: () => <div>Our new tab contents!</div>
      });
    });

- Add a `preset.js` file to include the results from the babel build

    function managerEntries(entry = []) {
      return [...entry, require.resolve('../dist/storybook/esm/manager')];
    }

    module.exports = {
      managerEntries
    };

- Include our preset inside the `.storybook/main.js` file

    module.exports = {
      "stories": [
        "../stories/**/*.stories.mdx",
        "../stories/**/*.stories.@(js|jsx|ts|tsx)"
      ],
      "addons": [
        "@storybook/addon-links",
        "@storybook/addon-essentials",
        "@storybook/addon-interactions",
        "../src/storybook/preset.js"
      ],
      "framework": "@storybook/react"
    }
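To make the `route` and `match` callbacks from the `manager.tsx` step easier to reason about, here is a minimal sketch that pulls them out into standalone functions. The names `changeLogRoute`, `changeLogMatch`, and `TAB_VIEW_MODE`, as well as the simplified argument interfaces, are my own assumptions for illustration and not Storybook's types.

```typescript
// Simplified argument shapes (assumptions, not Storybook's own types).
interface RouteArgs {
  storyId: string;
  refId?: string;
}

interface MatchArgs {
  viewMode?: string;
}

// The first path segment doubles as the view mode the tab matches on.
const TAB_VIEW_MODE = 'change-log';

// Builds the path the manager navigates to when the tab is clicked.
function changeLogRoute({ storyId }: RouteArgs): string {
  return `/${TAB_VIEW_MODE}/${storyId}`;
}

// Reports whether the tab should be treated as active for the current view.
function changeLogMatch({ viewMode }: MatchArgs): boolean {
  return viewMode === TAB_VIEW_MODE;
}
```

Keeping the view mode in one constant means the route and the match cannot drift apart, which is an easy mistake to make when both are written as string literals.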

Dynamically Read Tabs

Now we have a Change Log tab next to the Canvas and Docs tabs. Next, we want to display change log information based on the currently selected component. We start by creating a React component to handle displaying the tab.

    import React, { FC } from 'react';

    export const ChangeLogReader: FC<any> = () => {
      return <>Change Log Reader Custom Component</>;
    };

    ChangeLogReader.displayName = 'ChangeLogReader';

    export default ChangeLogReader;

As the name implies, this component looks through our components, finds the one that has a matching *.change-log.mdx file, and loads its contents to the screen when the user selects the `Change Log` tab. To do that, we need a webpack loader to read the file's contents. As a first attempt, `raw-loader` provides this ability. First, we retrieve the current story's id using the Storybook API hook. Inside our reader component, we then parse the component name out of the storyId. The file path must be spelled out in the require call because webpack analyzes files statically, which restricts dynamic imports to known file paths.

    import { useStorybookState } from '@storybook/api';
    import React, { FC, useEffect, useState } from 'react';

    export const ChangeLogReader: FC = () => {
      const [changeLog, setChangeLog] = useState(undefined as any);
      const state = useStorybookState();

      useEffect(() => {
        const componentName = getComponentName();
        if (!componentName) {
          setChangeLog(undefined);
          return;
        }

        try {
          // The literal parts of the path let webpack find candidate files at build time
          const changeLogModule = require(`!!raw-loader!../../../components/${componentName}/${componentName}.change-log.mdx`);
          setChangeLog(changeLogModule?.default);
        } catch (err) {
          // No change log file exists for this component
          setChangeLog(undefined);
        }
      }, [state.storyId, state.viewMode]);

      // Parses a story id such as "components-button--primary" into "button"
      const getComponentName = () => {
        const id = String(state.storyId);
        if (id.startsWith('components')) {
          const splitStoryName = id.split('--');
          splitStoryName.pop();
          const splitComponentName = splitStoryName[0].split('-');
          splitComponentName.shift();
          return splitComponentName.join(' ');
        } else {
          return undefined;
        }
      };

      return (
        <div
          style={{
            display: 'flex',
            padding: '12px 20px',
            backgroundColor: 'white',
            height: '100%'
          }}
        >
          <div style={{ width: '100%', maxWidth: '1000px', whiteSpace: 'pre-line' }}>{changeLog}</div>
        </div>
      );
    };

    ChangeLogReader.displayName = 'ChangeLogReader';

    export default ChangeLogReader;

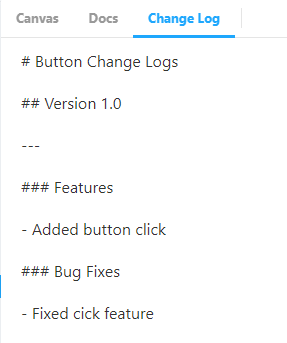

And now we are able to view the MDX file’s raw contents.
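The story id parsing is the part of the reader most likely to break, so it can help to pull it out of the component and test it on its own. Below is a minimal sketch of that idea. The function name `componentNameFromStoryId` is hypothetical, and it assumes story ids follow the `components-<name>--<story>` shape used in this post.

```typescript
// Derives a component name from a story id such as
// "components-date-picker--default". Returns undefined when the id is
// missing or does not belong to the assumed "components" section.
function componentNameFromStoryId(storyId: string | undefined): string | undefined {
  if (!storyId || !storyId.startsWith('components-')) {
    return undefined;
  }

  // "components-date-picker--default" -> "components-date-picker"
  const [titlePart] = storyId.split('--');

  // Drop the leading "components" segment and re-join the remaining words.
  const segments = titlePart.split('-').slice(1);

  return segments.length > 0 ? segments.join(' ') : undefined;
}
```

Returning `undefined` rather than a string for non-component stories keeps the `if (!componentName)` guard in the effect meaningful.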

Loading Logs using MDX

Since Storybook leverages mdx-js for loading MDX files, we are going to use the same loader to run through any change log files. In the ChangeLogReader, `raw-loader` is replaced with `@mdx-js/loader`. Unfortunately, this is not enough, as attempting to load the contents will run into unexpected token errors. The output from the mdx-js loader still needs transpiling before it can render as HTML. We can do this by prepending `babel-loader` to the loader chain. The resulting module provides an MDX function that produces the file's contents when it runs.

    ...
    const changeLogModule = require(`!!babel-loader!@mdx-js/loader!../../../components/${componentName}/${componentName}.change-log.mdx`);
    setChangeLog(changeLogModule?.default({}));
    ...

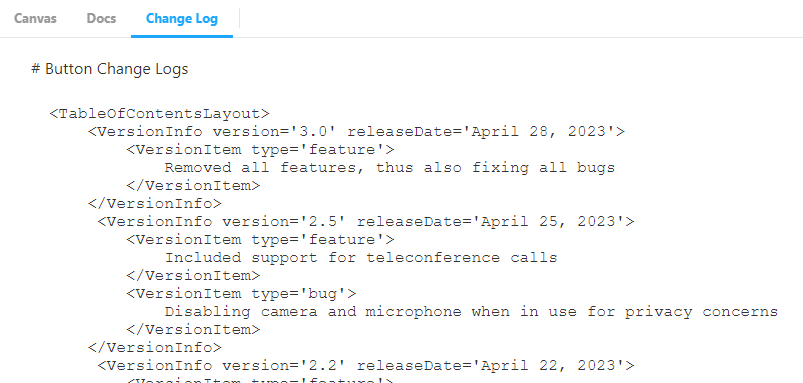

And now the MDX file can load dynamically with the correct formatting.
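The inline request string can look cryptic, so here is a rough sketch of how I read it. In webpack's inline syntax, loaders are separated by `!`, they run from right to left, and a leading `!!` disables the loaders configured in the webpack config. The `parseInlineRequest` helper and its `InlineRequest` shape are made up for illustration. This is a simplified model and not webpack's actual parser.

```typescript
interface InlineRequest {
  disablesConfiguredLoaders: boolean; // true when the request starts with "!!"
  loaders: string[];                  // listed in the order they execute
  resource: string;                   // the file being loaded
}

function parseInlineRequest(request: string): InlineRequest {
  const disablesConfiguredLoaders = request.startsWith('!!');

  // Strip the "!", "!!" or "-!" prefix, then split the loader chain.
  const parts = request.replace(/^-?!+/, '').split('!');

  // The last segment is the resource; everything before it is a loader.
  const resource = parts.pop() ?? '';

  // Loaders execute right to left, so reverse them into execution order.
  return { disablesConfiguredLoaders, loaders: parts.reverse(), resource };
}
```

Read this way, `!!babel-loader!@mdx-js/loader!./file.mdx` means the MDX loader runs first and `babel-loader` then transpiles its output.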

Unfortunately, the page will not parse JSX if the installed @mdx-js/loader is version one. Let’s upgrade the loader so we can also allow custom components.

Using MDXv2

First, upgrade @mdx-js/loader to version two. Next, we need to upgrade to webpack 5 and add Storybook’s manager and builder packages that handle the new version of webpack:

    yarn add webpack@5 @storybook/builder-webpack5@^6.5.16 @storybook/manager-webpack5@^6.5.16

Then we update Storybook’s main.js config file to use the new webpack builder.

    module.exports = {
      ...
      core: {
        builder: 'webpack5'
      }
    };

Since MDX v2 allows for rendering JSX components, we need to tell the dynamic loader which components are available. The components are provided when executing the MDX function.

    ...
    const components = {
      CustomTheme: CustomTheme,
      TableOfContentsLayout: TableOfContentsLayout,
      VersionInfo: VersionInfo,
      VersionItem: VersionItem
    };
    ...
    setChangeLog(changeLogModule?.default({ components: components }));
    ...

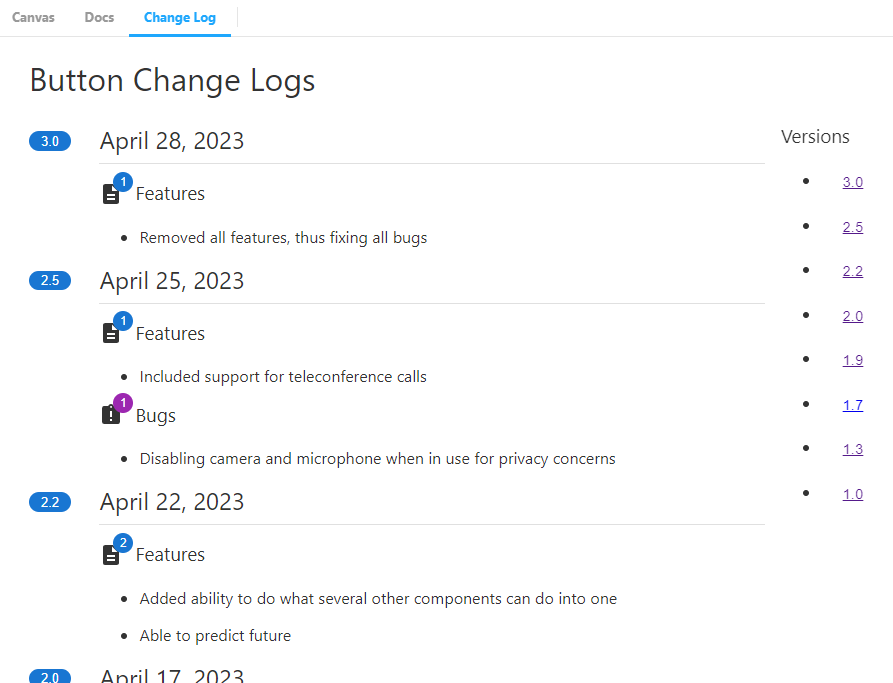

The other components are just helper components for displaying change logs. After running the build and starting Storybook again, we can see the final result.
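One thing to watch for: as far as I can tell, MDX v2 throws at render time when a change log uses a component that was not passed in the `components` map. A rough way to catch this earlier is to scan the raw MDX text for capitalized JSX tags and compare them against the map. The sketch below does that with a regular expression. The helper names and the sample MDX are made up for illustration, and the regex is only a heuristic (it would also match tags inside code samples).

```typescript
// Collects capitalized JSX tag names, e.g. "VersionInfo" from "<VersionInfo ...>".
// Closing tags ("</VersionInfo>") and lowercase HTML tags are ignored.
function findJsxComponentNames(mdxSource: string): string[] {
  const names = new Set<string>();
  const tagPattern = /<([A-Z][A-Za-z0-9]*)[\s/>]/g;
  let match: RegExpExecArray | null;
  while ((match = tagPattern.exec(mdxSource)) !== null) {
    names.add(match[1]);
  }
  return Array.from(names);
}

// Returns the tag names used in the source that the components map lacks.
function findMissingComponents(
  mdxSource: string,
  components: Record<string, unknown>
): string[] {
  return findJsxComponentNames(mdxSource).filter((name) => !(name in components));
}

// Hypothetical change log content; the props shown are not from the real components.
const sampleChangeLog = [
  '# Button',
  '',
  '<VersionInfo version="1.0.0">',
  '  <VersionItem>Initial release</VersionItem>',
  '</VersionInfo>',
  '<CustomTheme />'
].join('\n');
```

Calling `findMissingComponents(sampleChangeLog, { VersionInfo: true, VersionItem: true })` reports `CustomTheme`, which is the kind of message I would rather log in the tab than discover as a runtime error.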

Conclusion

Storybook provides a lot of great features out of the box. It is a shame they decided to remove the tab feature at the top of each page, but I can understand the decision given how much more flexible stories are when written in JSX.